Your company has an AI usage policy.

Great.

But a policy on paper doesn’t stop attackers; it only passes audits.

Because the real risk isn’t that someone uses DeepSeek or other low-friction AI tools.

The real risk is that they use it with a company email, a personal Gmail, or even a “work alias” like `mario.dev.work@gmail.com`, protected only by a password they haven’t changed since 2021, with no 2FA.

And you won’t even know there’s a potential exposure until it’s too late.

Instead of waiting for evidence of abuse, we did what an attacker would do:

we went looking for our own potential data leaks.

Not by spying on employees.

But by testing our external attack surface with the same data an adversary would use.

Here’s what we found in 15 minutes of focused reconnaissance.

Phase 1: Attackers hunt fresh exposure—not history

An attacker won’t start with 2019 breaches. They want recent infostealer logs, because those credentials are the most likely to still be valid and tied to active sessions.

We filtered recent breach data (November 2025 onward) for any record tied to our organization’s naming patterns, whether corporate (`*@company.com`) or personal (`first.last@gmail.com`, `dev.alias@proton.me`, etc.).

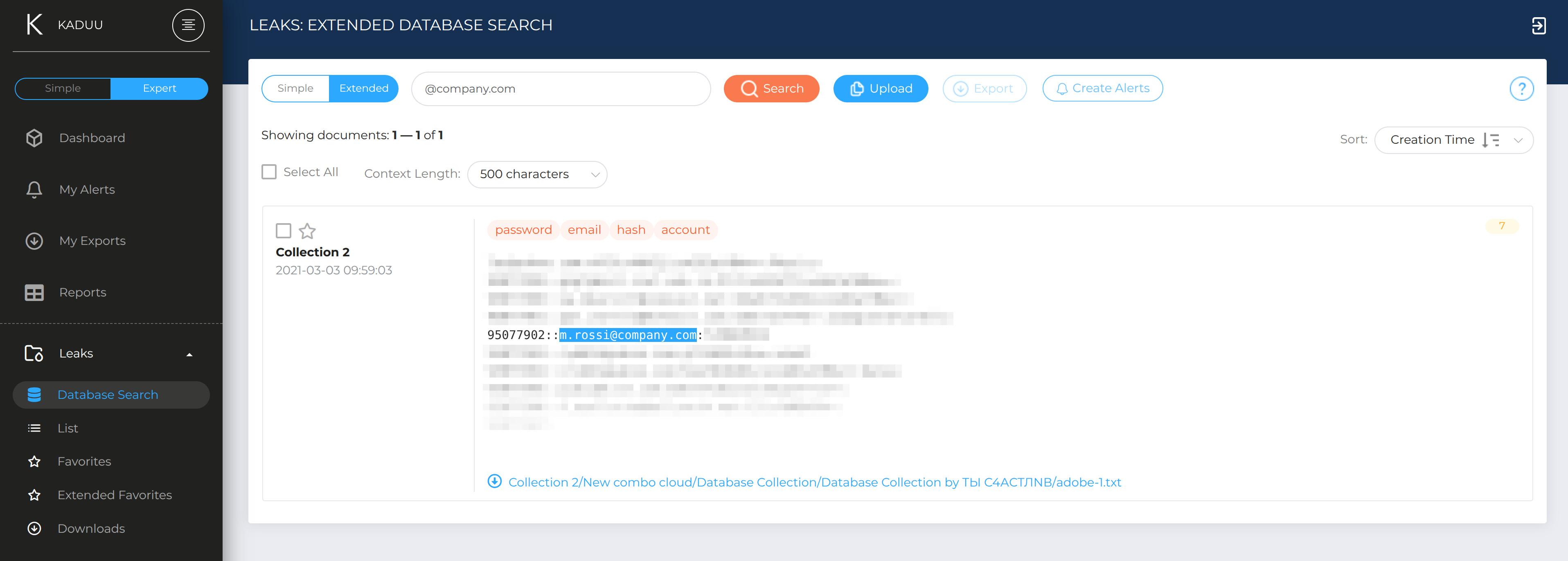

No archives. No noise. Just currently exposed credentials. For the search itself we used a platform built for exactly this job: DarknetSearch by Kaduu, with its leak center tool.
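Stripped of the tooling, the Phase 1 filter comes down to two checks: is the record recent, and does the address match one of the organization’s naming patterns? The Python sketch below shows that logic on a list of records you have already exported. It is not DarknetSearch’s API or export format: the `LeakRecord` shape, the `seen_on` field, and the example patterns are assumptions for illustration, and the cutoff simply mirrors the November 2025 window used here.

```python
from dataclasses import dataclass
from datetime import date
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class LeakRecord:
    # Hypothetical normalized record; real exports use their own schema.
    email: str
    seen_on: date  # date the record appeared in the leak source


# Hypothetical org patterns: the corporate domain plus known personal formats.
ORG_PATTERNS = (
    "*@company.com",
    "mario.rossi@gmail.com",
    "m.rossi*@gmail.com",
)

# Only "fresh" exposure matters: November 2025 onward.
CUTOFF = date(2025, 11, 1)


def is_fresh_org_exposure(record, patterns=ORG_PATTERNS, cutoff=CUTOFF):
    """True if the record is on/after the cutoff and its address matches a pattern."""
    if record.seen_on < cutoff:
        return False
    # Compare case-insensitively; fnmatchcase avoids OS-dependent case rules.
    email = record.email.strip().lower()
    return any(fnmatchcase(email, p.lower()) for p in patterns)


def filter_fresh(records, patterns=ORG_PATTERNS, cutoff=CUTOFF):
    """Keep only recent records tied to the organization's naming patterns."""
    return [r for r in records if is_fresh_org_exposure(r, patterns, cutoff)]
```

Glob patterns keep the rules readable for non-developers, at the cost of missing free-form aliases that follow no predictable format.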

Phase 2: “We rotated that password years ago—what’s the issue?”

Of the dozens of recent exposures we found, most had already been flagged by IAM.

But one stood out: a weak, personal-pattern password.

MRossi1980!

Not random. Not temporary. A classic mnemonic root: name plus birth year.

This isn’t an anomaly. It’s systemic behavior.
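The “name plus birth year” pattern is easy to catch on the defensive side, as a guard that runs when a password is set or reset, so roots like `MRossi1980!` never get stored in the first place. The sketch below is a deliberately crude heuristic, not a strength estimator: the function names, the three-character minimum token length, and the 1930-to-current-year window are all assumptions. Purpose-built estimators such as zxcvbn also accept user-specific words and cover far more patterns.

```python
import re
from datetime import date

# A standalone 4-digit year from 1900-2099 (not part of a longer digit run).
YEAR_RE = re.compile(r"(?<!\d)(?:19|20)\d{2}(?!\d)")


def name_tokens(first, last):
    """Hypothetical token set: first, last, initial+last, and both concatenations."""
    first, last = first.lower(), last.lower()
    tokens = {first, last, first[:1] + last, first + last, last + first}
    return {t for t in tokens if len(t) >= 3}  # skip tokens too short to be meaningful


def has_plausible_year(password, oldest=1930):
    """True if the password contains a year between `oldest` and the current year."""
    this_year = date.today().year
    return any(oldest <= int(m.group(0)) <= this_year for m in YEAR_RE.finditer(password))


def looks_like_name_plus_year(password, first, last):
    """Flag a candidate password built from the user's name and a plausible birth year."""
    pw = password.lower()
    return has_plausible_year(pw) and any(t in pw for t in name_tokens(first, last))
```

Because the check needs the user’s own name, it belongs in the reset flow or an identity provider hook, where that context is already available.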

And here’s the catch: this password wasn’t just on a personal account.

It was also found—unchanged—on a company email used to register for third-party services, including AI tools.

Why? Because no one forced a password reset on those external platforms. Your corporate rotation policy doesn’t reach DeepSeek, GitHub, or that SaaS tool someone signed up for in 2021.

Even worse: we found the same root tied to a “dummy” work account—`m.rossi.dev@gmail.com`—created to bypass corporate SSO and access “quick” dev tools.

These shadow identities are rarely monitored, never rotated, and often packed with sensitive context.

The Lifecycle of a Potential Leak

2021: First appearance

In a historical breach: `m.rossi@company.com : MRossi1980`.

Weak, but contained within a now-retired system.

2022–2024: Surface-level compliance

Corporate accounts got stronger passwords.

But the same user kept using `MRossi1980` everywhere else—on forums, AI tools, cloud IDEs—because those services never asked them to change it.

2025: Risk lives in the shadows

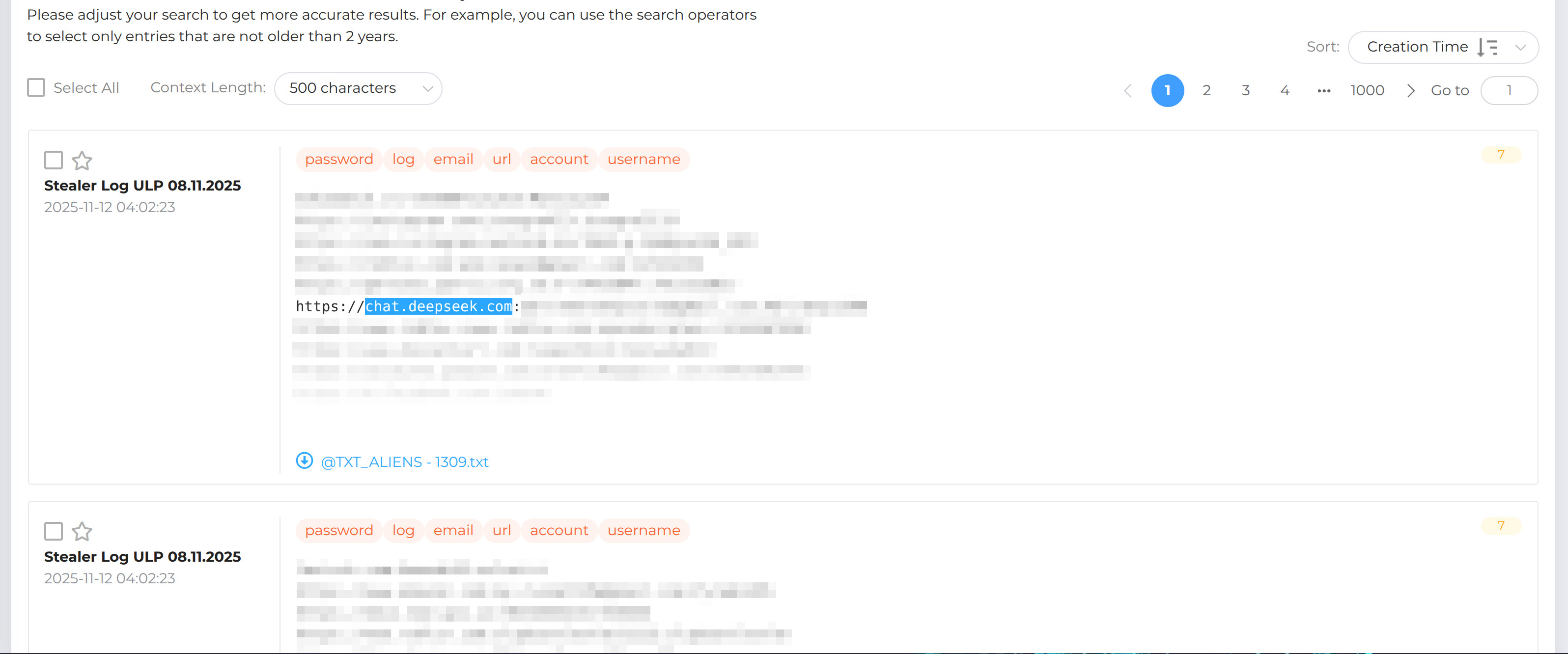

Today, a fresh infostealer log (November 2025) shows:

- Email: `rossimario808080@gmail.com`

- Password: `MRossi1980`

- Saved site: https://deepseek.com

No 2FA. No corporate visibility. No compromise—yet.
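The lifecycle above is a root-reuse problem: the same `MRossi1980` core shows up in 2021 and again in 2025 on a different address, and a trailing `!` does not make it a new password. Here is a minimal sketch of spotting that in your own leak-monitoring results, assuming records arrive as `(email, password, seen_on)` tuples. It normalizes each password to a root (lowercase, letters and digits only), fingerprints the root with a keyed hash so the report never holds plaintext, and groups by fingerprint. The normalization rule, the key handling, and the `min_accounts` threshold are assumptions; real variants (incremented digits, swapped suffixes) need fuzzier matching than this.

```python
import hashlib
import hmac
import re
from collections import defaultdict
from datetime import date

# Hypothetical key: load it from a secrets manager in practice, never hard-code it.
FINGERPRINT_KEY = b"replace-with-a-managed-secret"


def password_root(password: str) -> str:
    """Assumed normalization: lowercase, keep only letters and digits."""
    return re.sub(r"[^a-z0-9]", "", password.lower())


def root_fingerprint(password: str, key: bytes = FINGERPRINT_KEY) -> str:
    """Keyed SHA-256 of the root, so reports never contain the plaintext root."""
    digest = hmac.new(key, password_root(password).encode(), hashlib.sha256)
    return digest.hexdigest()[:16]


def find_persistent_roots(records: list[tuple[str, str, date]], min_accounts: int = 2) -> dict:
    """Group (email, password, seen_on) records by root fingerprint and keep
    roots shared by at least `min_accounts` distinct addresses."""
    by_root = defaultdict(list)
    for email, password, seen_on in records:
        by_root[root_fingerprint(password)].append((email.lower(), seen_on))

    report = {}
    for fingerprint, hits in by_root.items():
        accounts = sorted({email for email, _ in hits})
        if len(accounts) >= min_accounts:
            years = sorted({seen_on.year for _, seen_on in hits})
            report[fingerprint] = {
                "accounts": accounts,
                "first_seen": years[0],
                "last_seen": years[-1],
            }
    return report
```

Fed the two records from the lifecycle (with illustrative dates in 2021 and November 2025), it reports a single fingerprint shared by `m.rossi@company.com` and `rossimario808080@gmail.com`, first seen in 2021 and last seen in 2025.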

Using public OSINT (professional profiles, code commits, domain history), we confirmed this alias belongs to an active employee—without accessing private data.

I recommend several tools for this kind of attribution in a dedicated section of my website: The OSINT Rack.

DeepSeek allows email/password login (not just OAuth) and syncs chat history by default.

If the user ever pasted internal code, API keys, or system logic into that chat… it’s now sitting in an exposed account.

We don’t know if they did.

But an attacker wouldn’t wait to find out.

Phase 3: We stopped there – and that’s the point

We never attempted to access the account.

We never will. It’s unnecessary, unethical, and illegal.

Because this isn’t about proving actual data loss.

It’s about identifying potential exposure before it becomes a breach.

The chain is real:

- A mnemonic root persists for years.

- Corporate policy only enforces change inside the perimeter.

- Outside it, that root secures personal emails, dummy aliases, and unsanctioned AI tools.

- Those services become silent vaults of risk—until an infostealer opens them.

From Simulation to Mitigation

This isn’t an HR issue.

It’s a security design flaw.

The response should be strategic:

- Mandate password managers for all accounts—not just corporate ones.

- Inventory shadow identities: personal addresses (`*@gmail.com`, `*@proton.me`, etc.) used for work.

- Provide approved, sandboxed AI tools—so employees don’t create risky aliases to get work done.

- Treat external email exposure as identity risk: monitor fresh infostealer data for any address tied to your org’s naming patterns.
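The glob-style patterns from Phase 1 (`*@company.com`, `first.last@gmail.com`) miss free-form aliases like `rossimario808080@gmail.com`. One way to start the shadow-identity inventory is a name-token heuristic over addresses already surfaced by breach monitoring, sketched below in Python. The domain list comes from the examples in this article; the `Employee` type, the matching rule (surname plus first name or first initial), and the function names are assumptions, and every hit is a lead for review, not proof of ownership.

```python
import re
from dataclasses import dataclass

# Personal providers named in this article; extend for your environment.
PERSONAL_DOMAINS = {"gmail.com", "proton.me"}


@dataclass(frozen=True)
class Employee:
    # Hypothetical HR-directory entry.
    first: str
    last: str


def letters_only(text: str) -> str:
    """Lowercase and drop everything except a-z (dots, digits, separators)."""
    return re.sub(r"[^a-z]", "", text.lower())


def candidate_owners(address: str, employees) -> list:
    """Employees whose name pattern appears in a personal-domain address.

    Assumed rule: the local part contains the surname AND either contains the
    first name or starts with the first initial. Hits are leads, not proof.
    """
    local, _, domain = address.strip().lower().partition("@")
    if domain not in PERSONAL_DOMAINS:
        return []
    local_letters = letters_only(local)
    matches = []
    for emp in employees:
        first, last = letters_only(emp.first), letters_only(emp.last)
        if not first or not last:
            continue  # skip incomplete directory entries
        if last in local_letters and (
            first in local_letters or local_letters.startswith(first[0])
        ):
            matches.append(emp)
    return matches
```

Short or common surnames will produce false positives, and aliases built only from a first name (like `mario.dev.work@gmail.com`) slip through, so treat the output as a triage queue rather than a verdict.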

So, for the future…

The most dangerous password isn’t the one you use today.

It’s the one you think is dead—but lives on in a Gmail alias, a forgotten SaaS account, or an AI chat history.

Real defense isn’t about hoping your policy works.

It’s about verifying—every day—that it holds up in the real world, where attackers start with a 15-minute search…

and end with your data.